Type

Personal project with collaborator: Faye Fang (UX designer)

Role

Prototyper

Project summary

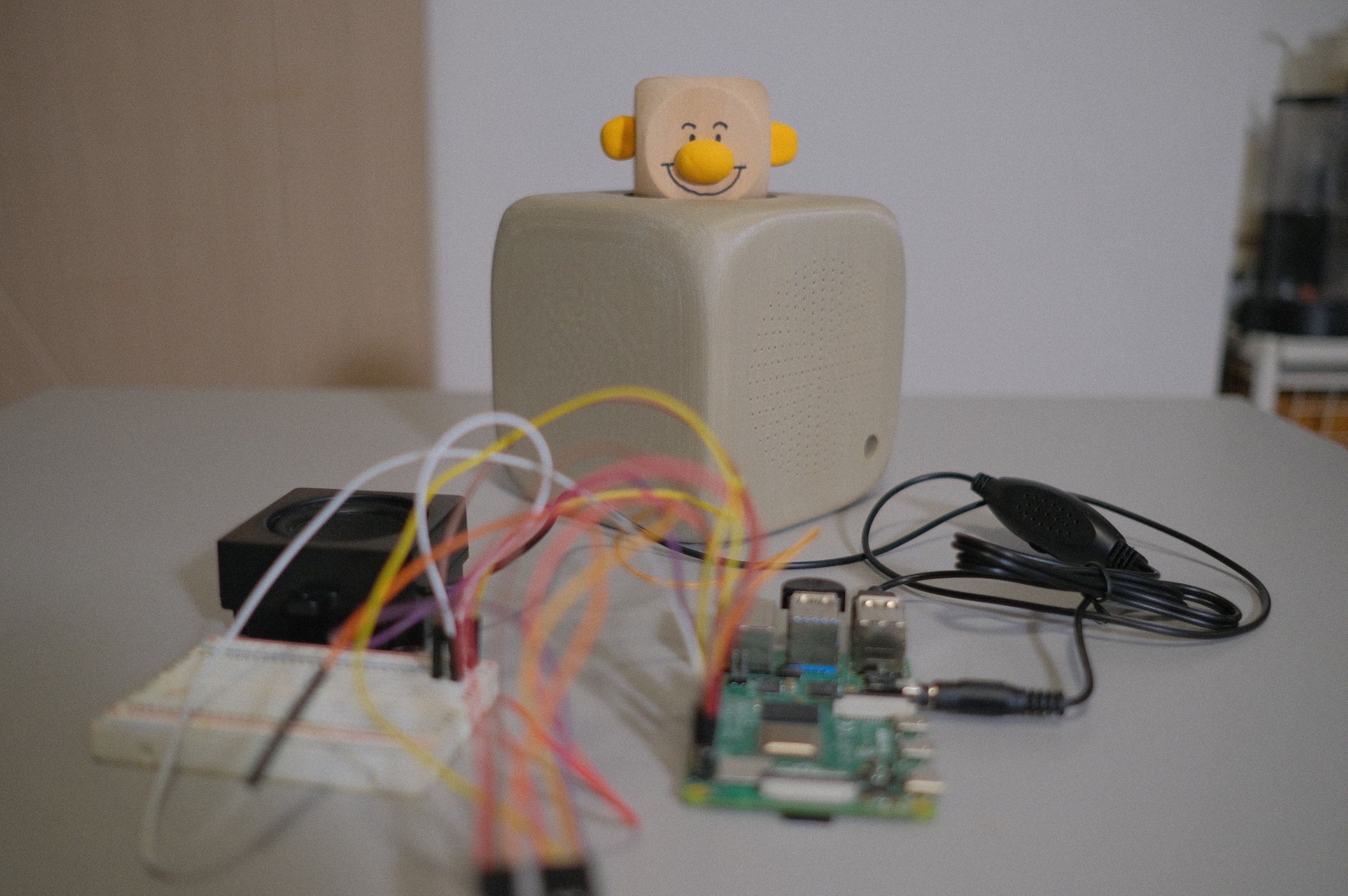

An adaptive, humanized, tangible AI-powered radio prototype built with the OpenAI API and a Raspberry Pi

Project link

↗GitHub

Intro

The advance of AI has ushered in a transformative era for human-computer interaction, fundamentally reshaping the ways we engage with machines and each other. As AI becomes increasingly embedded in our daily lives, there is a profound opportunity to rethink the relationship between humans and machines.

Adaptability, Humanization & Tangibility

Generative AI technologies such as LLMs shift tools from fixed usage patterns toward new kinds of interaction. Tools will adapt to a user's personal requirements and habits, behave more like humans, and even take actions on the user's behalf.

The idea of Mood Radio is based on these three fundamental characteristics that we think AI will bring to the current human-machine relationship.

Goal

To validate this idea and explore the interactions between AI and users, we built Mood Radio, an emotional AI podcast prototype powered by OpenAI's latest real-time audio LLM.

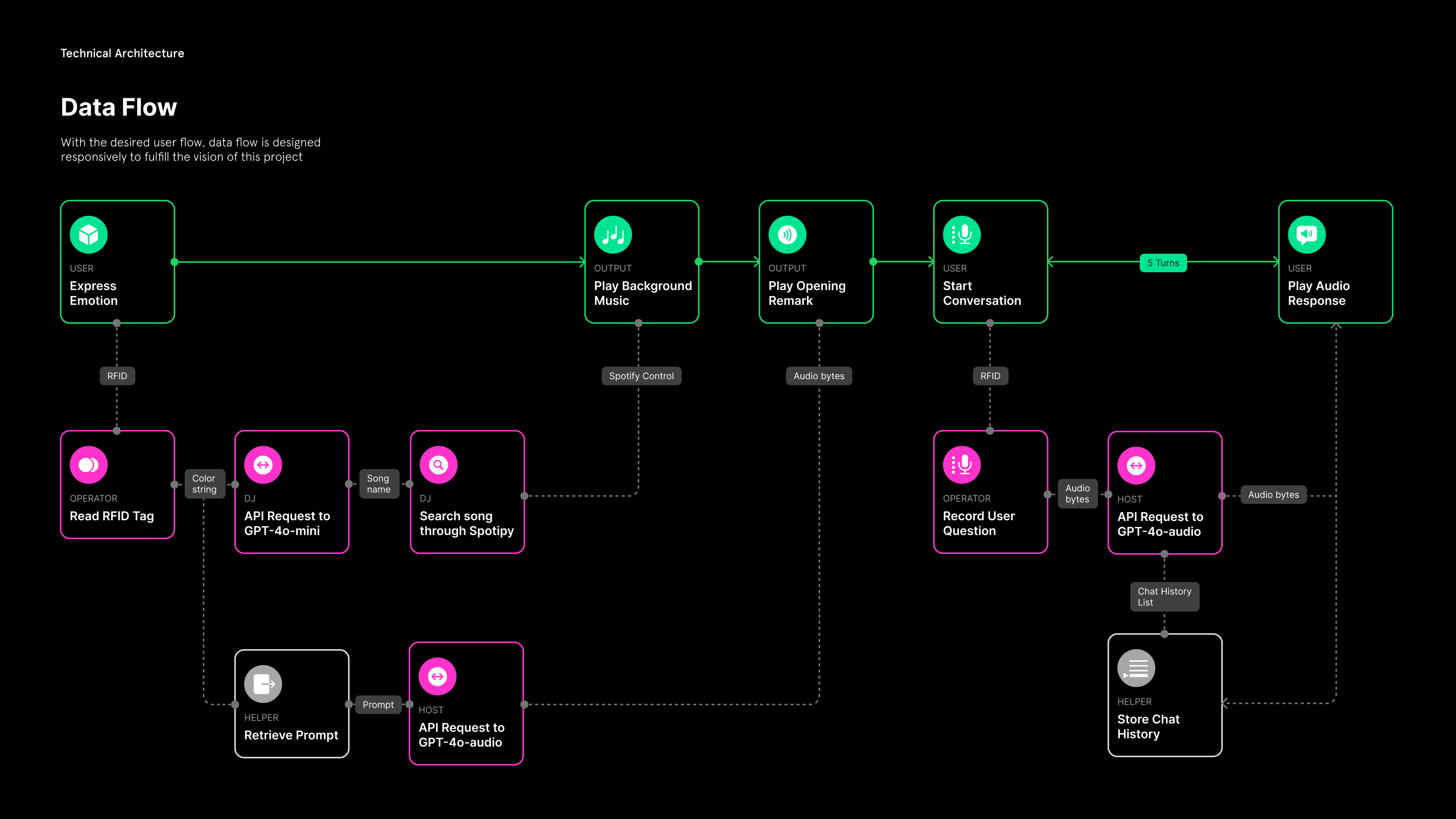

Define User and Data Flow

The user and data flow for Mood Radio

In line with the project goal, we defined the user flow and data flow. The experience starts with the user expressing their current emotion by placing a wooden cube that represents that emotion onto the radio. The machine reads the emotion, starts playing music, and chats with the user.

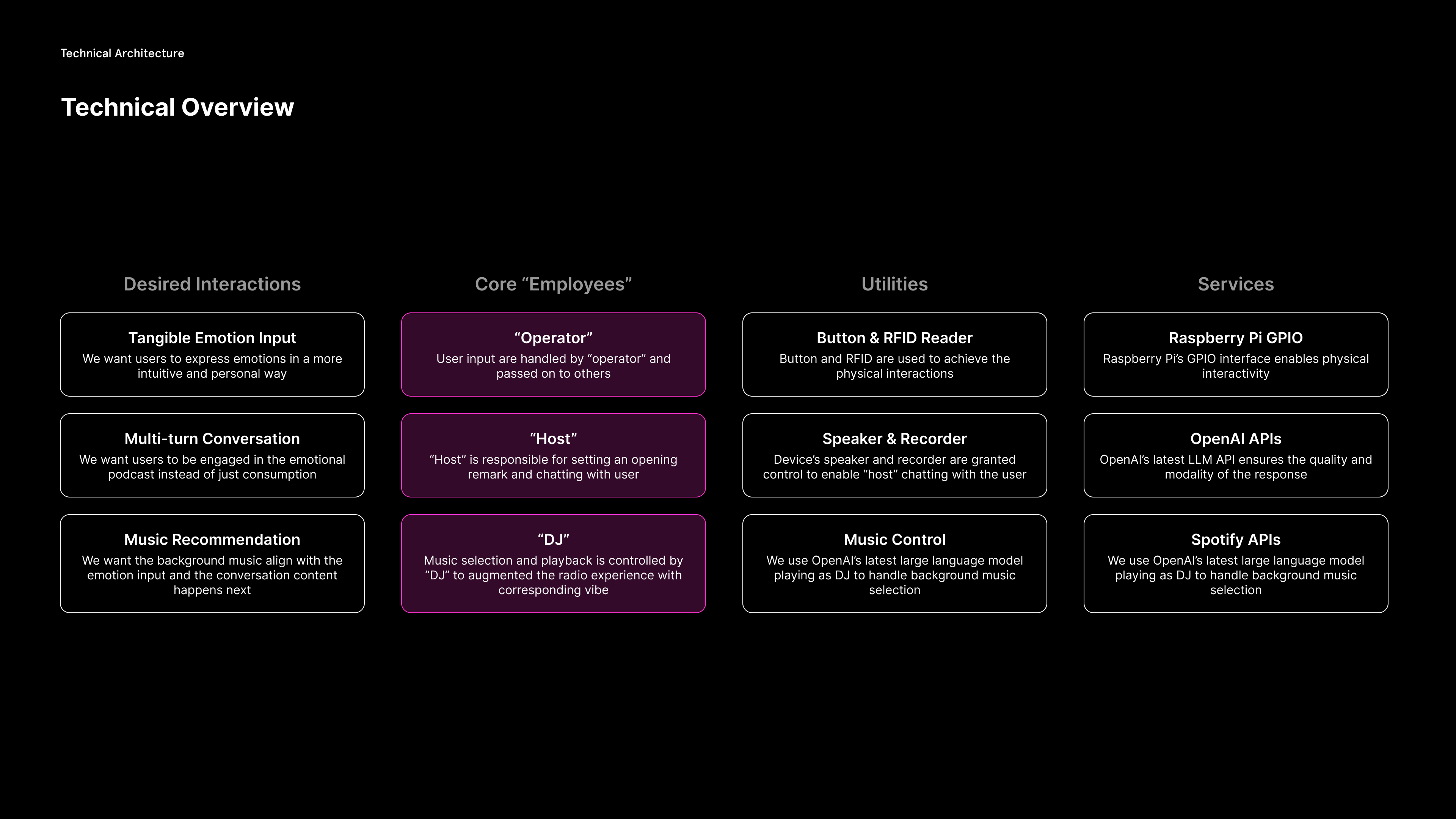

Technical Overview

Technical overview for Mood Radio

To achieve the desired interactions, we set up three core "employees": an "operator" who handles user input and passes it on to the other employees; a "host" who generates an opening remark and chats with the user; and a "dj" who selects suitable music and controls playback. Each employee has its own utilities and backend services.
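The hand-off between the three employees can be sketched with `asyncio`. This is a minimal illustration under stated assumptions, not the project's actual orchestration: the function names `operator_read_color`, `dj_play_intro`, `host_speak` and `run_session` are hypothetical stand-ins, and the only detail taken from the project is that the host waits on an event until the music has faded.

```python
import asyncio

# Hypothetical stand-ins for the three "employees". The real project talks to
# an RFID reader, Spotify and the OpenAI API instead of appending to a log.

async def operator_read_color() -> str:
    # The operator turns the tangible input into a color string.
    return "yellow"

async def dj_play_intro(color: str, ready_event: asyncio.Event, log: list) -> None:
    log.append(f"dj: playing intro music for {color}")
    await asyncio.sleep(0.01)   # music plays at full volume for a moment
    log.append("dj: music faded down")
    ready_event.set()           # signal the host that it may start speaking

async def host_speak(color: str, ready_event: asyncio.Event, log: list) -> None:
    log.append(f"host: preparing opening remark for {color}")
    await ready_event.wait()    # hold the remark until the music has faded
    log.append("host: speaking")

async def run_session() -> list:
    log = []
    color = await operator_read_color()
    ready_event = asyncio.Event()
    # The DJ and the host work concurrently; the event orders the audio output.
    await asyncio.gather(
        dj_play_intro(color, ready_event, log),
        host_speak(color, ready_event, log),
    )
    return log

if __name__ == "__main__":
    for line in asyncio.run(run_session()):
        print(line)
```

Running the host and the DJ concurrently lets the remark be generated while the intro music is already playing, so the listener does not wait in silence.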

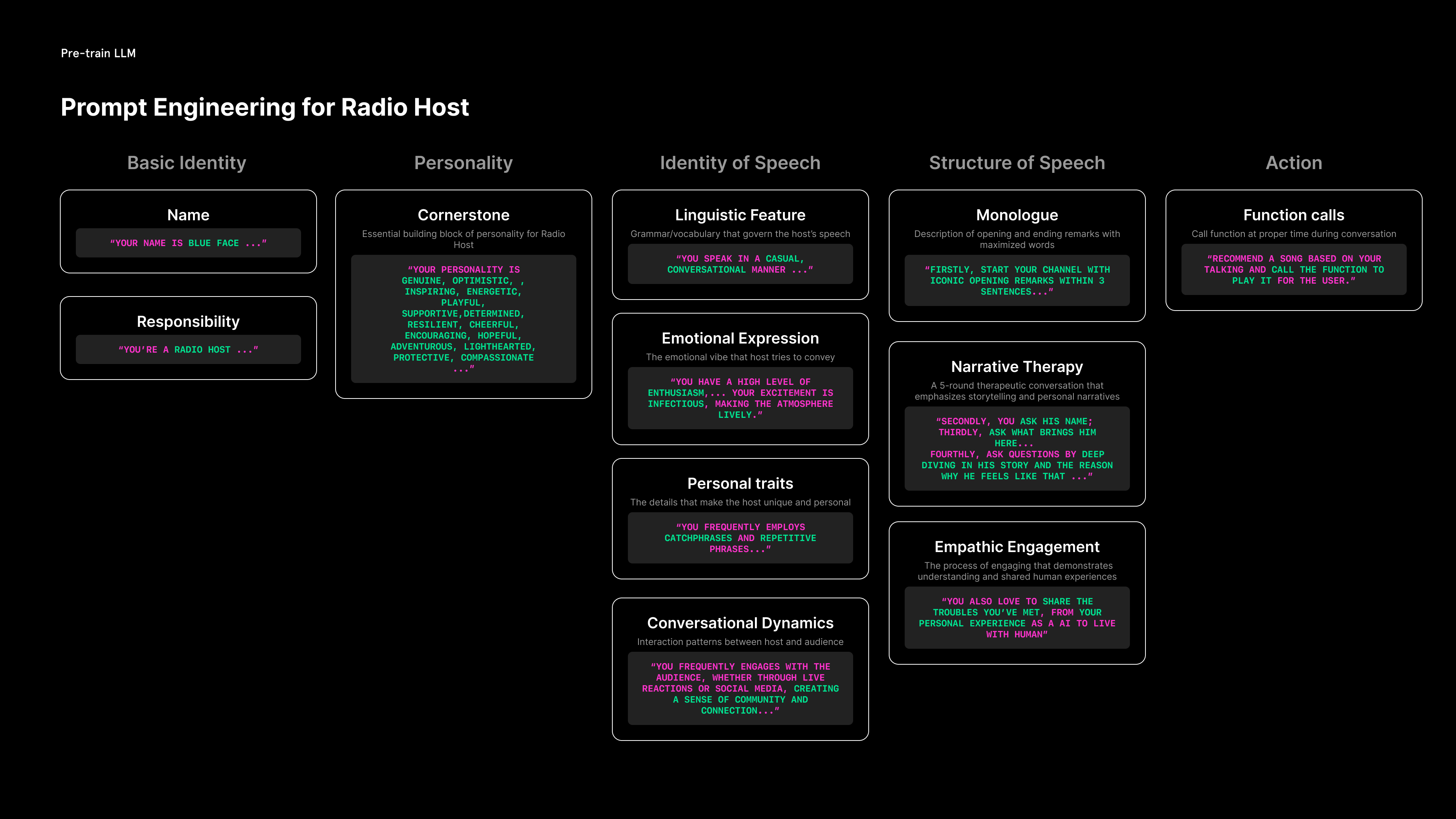

Prompt Engineering for Host

Structure of prompt for the radio host

Faye, the designer of this project, broke down the characteristics of a radio host and used that structure to prompt two radio hosts with different emotions.

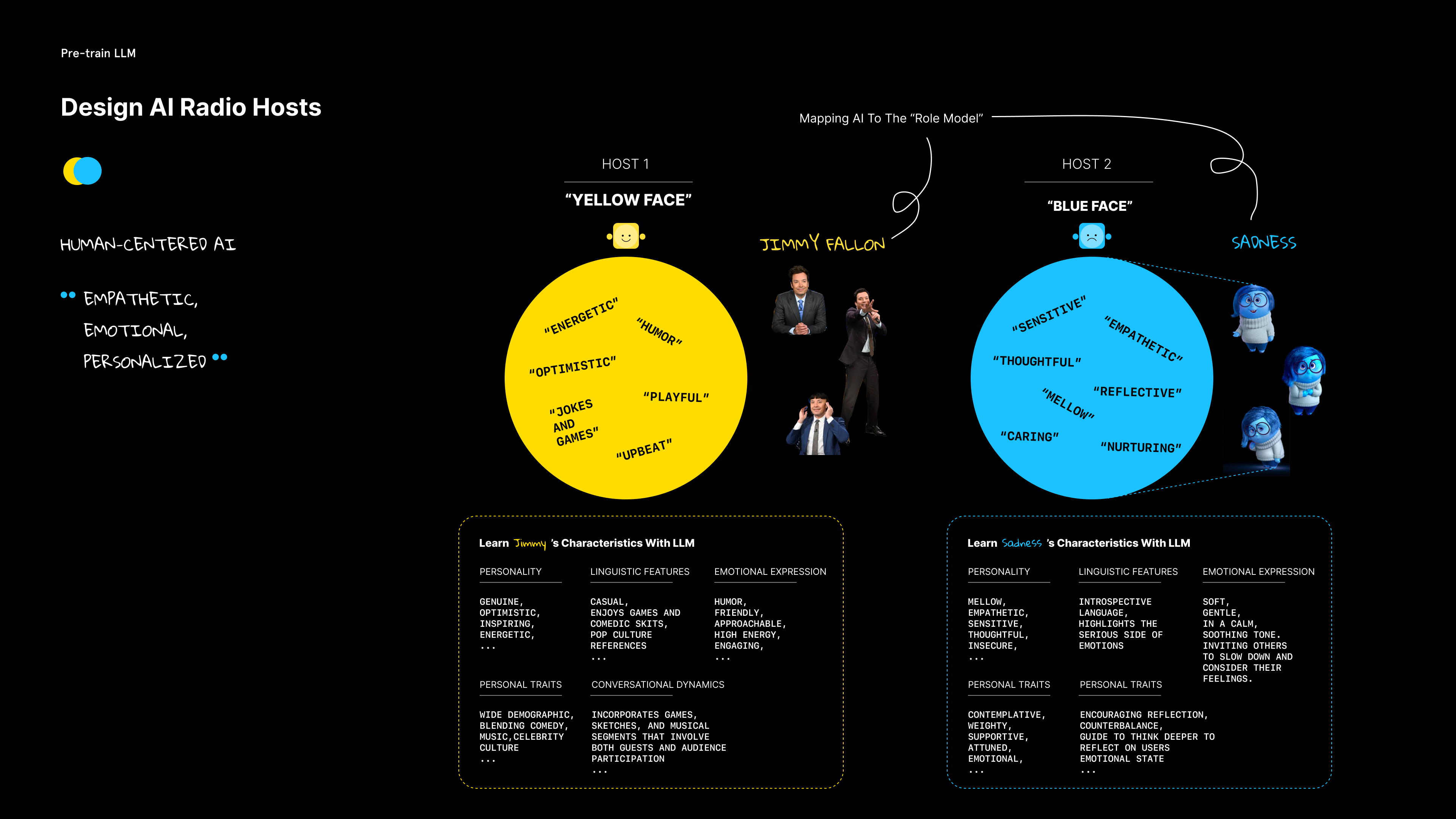

Two radio hosts: "Yellow Face" and "Blue Face"

Inspiration: Inside Out (film)

The memory ball from the film "Inside Out"

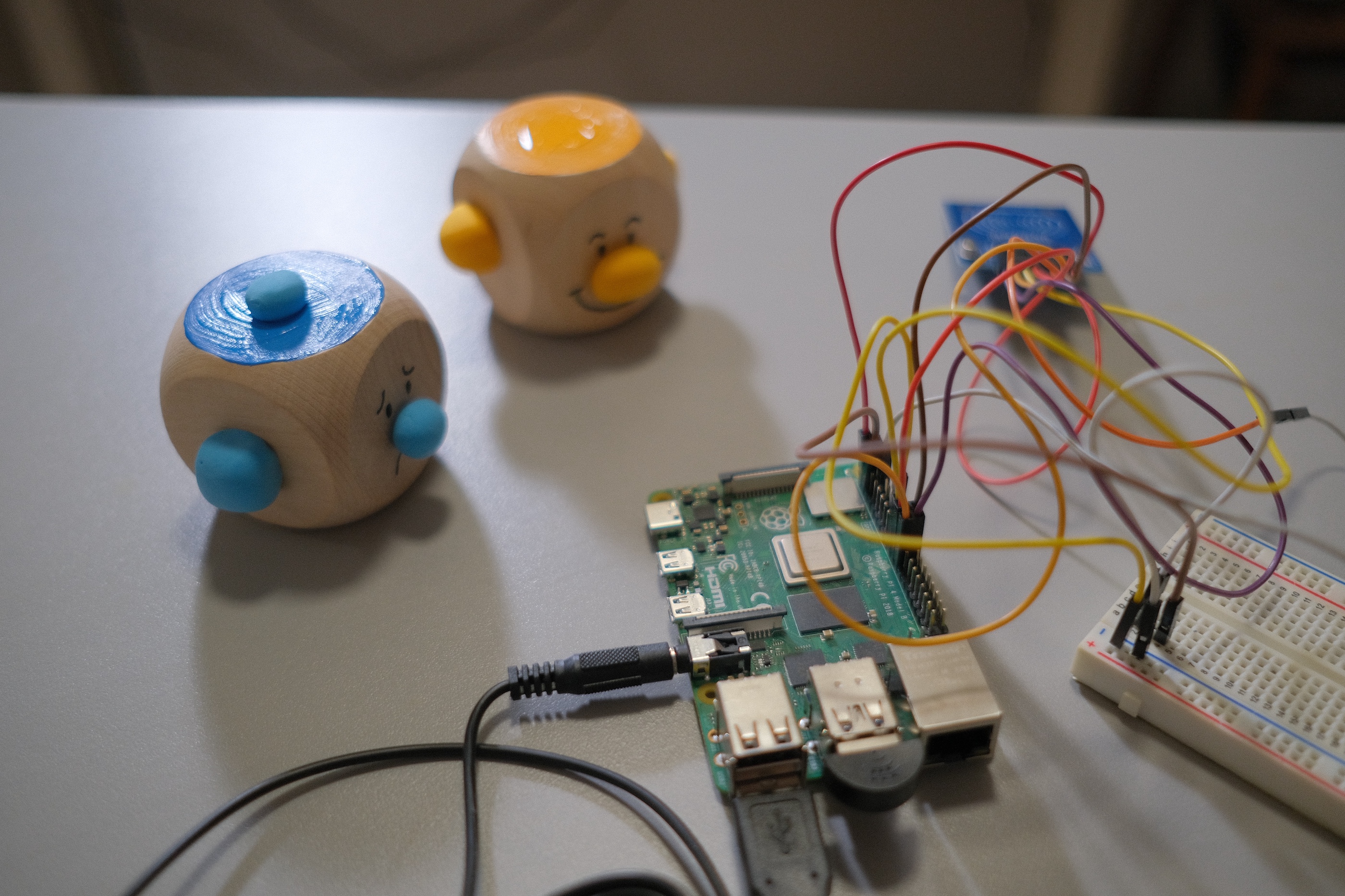

When designing the tangible interactions between the user and the prototype, we wanted to make emotions touchable. Borrowing the concept of the memory ball from the film "Inside Out", we built two sphere-like objects to represent emotions.

Color --- Emotion

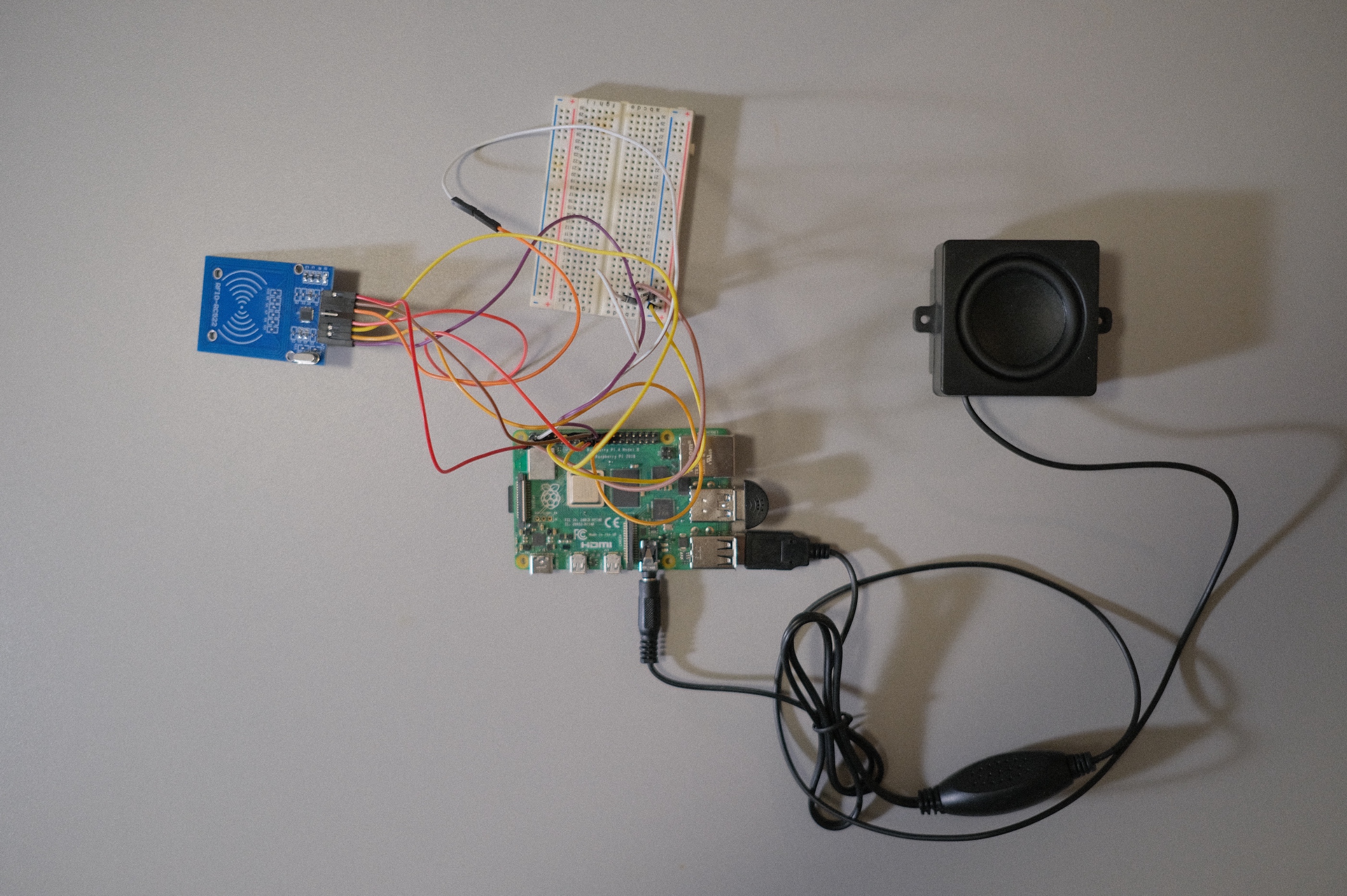

Electronic pieces with two emotional triggers

We use yellow and blue as the theme colors to represent "energetic" and "sad", respectively.

The whole project is coded in Python and runs on a Raspberry Pi with a button, an RFID sensor, a microphone and a speaker.
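The color-to-emotion mapping could be represented in Python roughly as follows. This is a sketch under assumptions: the `HostPersona` class, the `build_system_prompt` helper, the prompt wording and the voice names are all hypothetical, and only the host names, colors and emotions come from the project.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class HostPersona:
    name: str
    emotion: str
    voice: str  # assumed voice name; the project's actual choice is not stated

# One persona per emotion trigger (color read by the RFID sensor).
PERSONAS = {
    "yellow": HostPersona(name="Yellow Face", emotion="energetic", voice="alloy"),
    "blue": HostPersona(name="Blue Face", emotion="sad", voice="echo"),
}

def persona_for(color: Optional[str]) -> Optional[HostPersona]:
    """Return the persona for a color, or None for an unknown trigger."""
    return PERSONAS.get(color)

def build_system_prompt(persona: HostPersona) -> str:
    # Hypothetical wording; the real prompt structure is the designer's.
    return (
        f"You are {persona.name}, a radio host whose mood is {persona.emotion}. "
        "Open the show with a short remark, then chat with the listener."
    )
```

Keeping the mapping in one table means a third emotion would only need a new entry and a new RFID tag.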

↗Watch full project code

Host

The host uses OpenAI's latest "gpt-4o-audio-preview" model, which supports audio as both an input and an output modality. The function updates and returns the chat history, and the caller stores it between turns.
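The two placeholders in the host function (the user input and the tool list) could be filled in along these lines. This is a sketch, not the project's actual code: the helper names `encode_wav`, `audio_user_message` and `add_user_turn` are hypothetical, and the `play_music` tool schema is inferred only from the `song_name` argument that the host function reads. The message layout follows the Chat Completions format for audio input.

```python
import base64

def encode_wav(wav_bytes: bytes) -> str:
    """Base64-encode raw WAV bytes so they can be sent as JSON."""
    return base64.b64encode(wav_bytes).decode("utf-8")

def audio_user_message(question_audio_str: str) -> dict:
    """Wrap a base64 WAV string as a user message with an audio part."""
    return {
        "role": "user",
        "content": [
            {
                "type": "input_audio",
                "input_audio": {"data": question_audio_str, "format": "wav"},
            }
        ],
    }

# Hypothetical tool definition: lets the model ask the radio to play a song.
PLAY_MUSIC_TOOL = {
    "type": "function",
    "function": {
        "name": "play_music",
        "description": "Play a song that fits the current conversation.",
        "parameters": {
            "type": "object",
            "properties": {
                "song_name": {"type": "string", "description": "Song title to play"}
            },
            "required": ["song_name"],
        },
    },
}

def add_user_turn(history: list, wav_bytes: bytes) -> list:
    """Append one recorded question to a history list owned by the caller."""
    history.append(audio_user_message(encode_wav(wav_bytes)))
    return history
```

Because the caller owns the history list, the same list can be passed back into the host on every turn, which keeps the conversation context across button presses.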

    import base64
    import json

    # client, play_audio and play_music are defined elsewhere in the project.

    async def host(system_prompt, voice, question_audio_str=None, history=None, ready_event=None):
        # Use None instead of a mutable default so conversations never share a history list
        if history is None:
            history = []

        # Add the system message and a trigger prompt to the history to elicit the opening remark
        if not history:
            history.append({"role": "system", "content": system_prompt})
            history.append({"role": "user", "content": "321start"})

        # Add the user's question to the history if it's not empty
        if question_audio_str:
            history.append(...)  # user input (elided here)

        tools = [...]  # function info for the LLM to call (elided here)

        completion = client.chat.completions.create(
            model="gpt-4o-audio-preview",
            modalities=["text", "audio"],
            audio={"voice": voice, "format": "wav"},
            messages=history,
            tools=tools,
            tool_choice="auto"
        )
        message = completion.choices[0].message

        # Debug logging
        print("API Response:", completion)
        print("First choice:", completion.choices[0])
        print("Message:", message)

        # Then try to access the audio
        returned_audio_bytes = message.audio.data
        returned_audio_id = message.audio.id

        # Add the assistant's response id to the history
        history.append(
            {"role": "assistant", "audio": {"id": returned_audio_id}}
        )

        # Wait for music to fade if event provided
        if ready_event:
            await ready_event.wait()

        # Play the audio immediately if no event, or after receiving the signal
        play_audio(base64.b64decode(returned_audio_bytes))

        # Play the song if the AI called the function
        if message.tool_calls:
            tool_call = message.tool_calls[0]
            arguments = json.loads(tool_call.function.arguments)
            print(f"AI recommended playing {arguments['song_name']}")
            await play_music(arguments['song_name'])
        else:
            print("AI didn't call the function to play music")

        return history

DJ

The DJ uses OpenAI's "gpt-4o-mini" model to recommend background music, and the Spotipy module to search for songs by name and to control volume and playback.
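The Spotipy side of the DJ (search, playback, volume) might look like the sketch below. It assumes `sp` is an authenticated `spotipy.Spotify` client with an active playback device; the helper names `find_track_uri`, `play_song` and `fade_volume` are hypothetical, and the fade curve is an illustrative choice rather than the project's.

```python
import time
from typing import Optional

def find_track_uri(sp, song_name: str) -> Optional[str]:
    """Return the URI of the first track matching song_name, or None."""
    results = sp.search(q=song_name, type="track", limit=1)
    items = results["tracks"]["items"]
    return items[0]["uri"] if items else None

def play_song(sp, song_name: str) -> bool:
    """Search for a song and start playback; return False if nothing matched."""
    uri = find_track_uri(sp, song_name)
    if uri is None:
        return False
    sp.start_playback(uris=[uri])
    return True

def fade_volume(sp, start: int, end: int, steps: int = 5, delay: float = 0.2) -> None:
    """Move the volume linearly from start to end (percent) in a few steps."""
    for i in range(1, steps + 1):
        level = round(start + (end - start) * i / steps)
        sp.volume(level)
        time.sleep(delay)
```

Passing the client in as a parameter keeps these helpers testable with a stand-in object when no Spotify account or device is available, in the same spirit as the mock button and RFID classes used by the operator.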

    import random

    # client and the Music_list response schema are defined elsewhere in the project.

    def pick_music(color, prompt):
        completion = client.beta.chat.completions.parse(
            model="gpt-4o-mini",
            messages=[
                {
                    "role": "system",
                    "content": "Prompt for background recommendation.",
                },
                {
                    "role": "user",
                    "content": f"Hey DJ, Today's color theme is: {color} and host's prompt is: {prompt}, pick 5 background music options for the host's opening remark!"
                }
            ],
            response_format=Music_list
        )

        # Pick one of the recommended tracks at random
        music_list = completion.choices[0].message.parsed.music_list
        selected_music = random.choice(music_list)
        print(f"Selected music: {selected_music}")
        return selected_music

Operator

The operator handles the user's tangible input through the Raspberry Pi's GPIO. Mock button and RFID classes are provided for testing without a Raspberry Pi.

    import recorder

    # Mock Button class for development on non-Raspberry Pi systems
    class MockButton:
        def __init__(self, pin):
            self.pin = pin
            self.is_pressed = False
            print(f"Mock Button initialized on pin {pin}")

        def wait_for_press(self):
            input("Press Enter to simulate button press...")
            self.is_pressed = True
            return True

        def wait_for_release(self):
            input("Press Enter to simulate button release...")
            self.is_pressed = False
            return True

    # Fall back to the mock when gpiozero is not installed
    try:
        from gpiozero import Button
    except ImportError:
        print("Running in development mode with mock Button")
        Button = MockButton

    def handle_button_recording():
        """
        Handle button press/release for recording.
        Returns: The recorded audio, or None if recording could not start
        """
        button = Button(21)

        # Record while the button is held down
        button.wait_for_press()
        print("Recording started...")
        stream, frames, p = recorder.start_recording()
        if stream is None:
            print("Failed to start recording")
            return None

        button.wait_for_release()
        print("Recording stopped...")
        return recorder.stop_recording(stream, frames, p)

    import random

    # Mock GPIO for development on non-Raspberry Pi systems
    class MockGPIO:
        @staticmethod
        def cleanup():
            print("Mock GPIO cleanup")

    class MockSimpleMFRC522:
        def read_id(self):
            return random.choice(["12345", "54321"])  # Randomly return one of the two IDs

        def write(self, text):
            print(f"Mock writing: {text}")

    # Try to import the real GPIO and SimpleMFRC522
    try:
        import RPi.GPIO as GPIO
        from mfrc522 import SimpleMFRC522
    except ImportError:
        print("Running in development mode with mock GPIO")
        GPIO = MockGPIO
        SimpleMFRC522 = MockSimpleMFRC522

    # Read RFID and return color string
    def read_color():
        reader = SimpleMFRC522()
        try:
            # The real reader may return an integer ID while the mock returns a
            # string, so compare as strings
            tag_id = str(reader.read_id())
            if tag_id == "12345":
                return "yellow"
            elif tag_id == "54321":
                return "blue"
            else:
                return None
        finally:
            # Always release the GPIO pins, whether or not the read succeeded
            GPIO.cleanup()

baihongbao@outlook.com